Drivers Research In Motion Cameras

Covid-19 Impact on Global Motion Activated Cameras Industry Research Report 2020, Segmented by Major Market Players, Types, Applications and Countries, Forecast to 2026.

The research team projects that the Motion Activated Cameras market size will grow from XXX in 2019 to XXX by 2026, at an estimated CAGR of XX.

Amazon.com has revealed plans to install AI-powered video cameras in its branded delivery vans, in a move that the world's largest e-commerce firm says would improve safety of both drivers and the ...

Phantom Camera Control (PCC) is the main software application that allows users to get the most out of Phantom cameras. While many newer Phantom models have On-Camera Control and remote control capabilities, PCC is the only place that controls every camera function on every Phantom camera model.

Install drivers manually. If your webcam still doesn't work, you may need to download specific drivers from its manufacturer. If you have a laptop with a built-in webcam, the drivers will usually be on the laptop manufacturer's website (e.g., Acer, Lenovo). If it's a USB webcam, go to the camera manufacturer's website instead.

Over the last 25 years, EthoVision XT has evolved from video tracking software into a software platform. Designed to be at the core of your lab, EthoVision XT offers integration of data and automation of behavioral experiments with any animal.

The NVIDIA® DriveWorks SDK is the foundation for all autonomous vehicle (AV) software development. It provides an extensive set of fundamental capabilities, including processing modules, tools and frameworks that are required for advanced AV development.

With the DriveWorks SDK, developers can begin innovating their own AV solution, instead of spending time developing basic low-level functionality. DriveWorks is modular, open, and readily customizable. Developers can use a single module within their own software stack to achieve a specific function, or use multiple modules to accomplish a higher-level objective.

DriveWorks is suited for the following:

- Integrate automotive sensors within your software.

- Accelerate image and Lidar data processing for AV algorithms.

- Interface with a vehicle’s ECUs and receive their state.

- Accelerate neural network inference for AV perception.

- Capture and post-process data from multiple sensors.

- Calibrate multiple sensors with precision.

- Track and predict a vehicle’s pose.

The Sensor Abstraction Layer (SAL) provides:

- A unified interface to the vehicle’s sensors.

- Synchronized timestamping of sensor data.

- Ability to serialize the sensor data for recording.

- Ability to replay recorded data.

- Image signal processing acceleration via Xavier SoC ISP hardware engine.
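
The timestamping, serialization and replay items above can be illustrated with a minimal sketch. This is not the DriveWorks API: the `Sample` type, the sensor names and the JSON Lines recording format are all assumptions made for illustration. It only shows the general idea of merging per-sensor streams onto one shared timeline, recording them, and reading them back in the same order.

```python
import heapq
import json
from typing import Iterable, Iterator, NamedTuple


class Sample(NamedTuple):
    """Hypothetical sensor sample; not a DriveWorks type."""
    timestamp_us: int  # time on a shared clock, in microseconds
    sensor: str        # illustrative sensor name, e.g. "camera:front"
    payload: str       # stand-in for the raw sensor data


def merge_by_timestamp(*streams: Iterable[Sample]) -> Iterator[Sample]:
    """Interleave streams that are each already time-ordered into one timeline."""
    return heapq.merge(*streams, key=lambda s: s.timestamp_us)


def serialize(samples: Iterable[Sample]) -> str:
    """Write samples as JSON Lines, one record per line (assumed format)."""
    return "\n".join(json.dumps(s._asdict()) for s in samples)


def replay(recording: str) -> Iterator[Sample]:
    """Yield samples back from a recording in their recorded order."""
    for line in recording.splitlines():
        yield Sample(**json.loads(line))


camera = [Sample(1000, "camera:front", "frame0"),
          Sample(34000, "camera:front", "frame1")]
lidar = [Sample(500, "lidar:top", "sweep0"),
         Sample(100500, "lidar:top", "sweep1")]

recording = serialize(merge_by_timestamp(camera, lidar))
replayed = list(replay(recording))
```

Because every sample carries a timestamp from the same clock, downstream code can consume live and replayed data through the same iterator interface.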

All DriveWorks modules are compatible with the SAL, reducing the need for specialized sensor data processing. The SAL supports a diverse set of automotive sensors out of the box. Additional support can be added with the robust sensor plugin architecture.

These sensor plugins provide flexible options for interfacing new AV sensors with the SAL. Features including sensor lifecycle management, timestamp synchronization and sensor data replay can be realized with minimal development effort.

- Custom Camera Feature: DriveWorks enables external developers to add DriveWorks support for any image sensor using the GMSL interface on DRIVE AGX Developer Kit.

- Comprehensive Sensor Plugin Framework: This allows developers to bring new lidar, radar, IMU, GPS and CAN-based sensors into the DriveWorks SAL that are not natively supported by DriveWorks.

DriveWorks provides a wide array of optimized low-level image and point cloud processing modules for incoming sensor data to use in higher level perception, mapping, and planning algorithms. Selected modules can be seamlessly run and accelerated on different DRIVE AGX hardware engines (such as PVA or GPU), giving the developer options and control over their application. Image processing capabilities include feature detection and tracking, structure from motion, and rectification. Point cloud processing capabilities include lidar packet accumulation, registration and planar segmentation (and more). New and improved modules are delivered with each release.

The VehicleIO module supports multiple production drive-by-wire backends to send commands to and receive status from the vehicle. In the event your drive-by-wire device is not supported out of the box, the VehicleIO Plugin framework enables easy integration with custom interfaces.

The DriveWorks Deep Neural Network (DNN) Framework can be used for loading and inferring TensorRT models that have either been provided in DRIVE Software or have been independently trained. The DNN Plugins module enables DNN models that are composed of layers that are not supported by TensorRT to benefit from the efficiency of TensorRT. The DNN Framework enables inference acceleration using integrated GPU (in Xavier SoC), discrete GPU (in DRIVE AGX Pegasus) or integrated DLA (Deep Learning Accelerator in Xavier SoC).

To improve developer productivity, DriveWorks provides an extensive set of tools, reference applications, and documentation including:

- Sensor Data Recording and Post-Recording Tools: A suite of tools to record, synchronize and playback the data captured from multiple sensors interfaced to the NVIDIA DRIVE™ AGX platform. Recorded data can be used as a high quality synchronized source for training and other development purposes.

If a sensor’s position or inherent properties deviate from nominal/assumed parameters, then any downstream processing may be faulty, whether it’s for self-driving or data collection. Calibration gives you a reliable foundation for building AV solutions with the assurance that they’ll achieve high fidelity, consistent, up-to-date sensor data. Calibration supports alignment of the vehicle’s camera, lidar, radar and Inertial Measurement Unit (IMU) sensors that are compatible with the DriveWorks Sensor Abstraction Layer.

Static Calibration Tools: Measures manufacturing variation for multiple AV sensors to a high degree of accuracy. Camera Calibration includes both extrinsic and intrinsic calibration, while the IMU Calibration Tool calibrates vehicle orientation with respect to the coordinate system.

Self-Calibration: Provides real-time compensation for environmental changes or mechanical stress on sensors caused by events such as changes in road gradient, tire pressure, vehicle passenger loading, and other minor changes. It corrects nominal calibration parameters (captured using the Static Calibration Tools) based on current sensor measurements in real-time, meaning that the algorithms are performant, safety-compliant, and optimized for the platform.

The DriveWorks Egomotion module uses a motion model to track and predict a vehicle’s pose. DriveWorks uses two types of motion models: an odometry-only model and, if an IMU is available, a model based on IMU and odometry.
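
To make the idea of an odometry-only motion model concrete, here is a minimal sketch of a generic constant-speed, constant-yaw-rate model in two dimensions. It is not the DriveWorks Egomotion API or its actual model; `Pose2D` and `predict` are hypothetical names, and the inputs (speed and yaw rate) are assumed to come from wheel odometry.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Pose2D:
    """Hypothetical planar pose; not a DriveWorks type."""
    x: float        # metres
    y: float        # metres
    heading: float  # radians, counter-clockwise from the x axis


def predict(pose: Pose2D, speed: float, yaw_rate: float, dt: float) -> Pose2D:
    """Advance the pose by dt seconds, assuming constant speed and yaw rate."""
    if abs(yaw_rate) < 1e-9:
        # Straight-line motion: heading is unchanged.
        return Pose2D(pose.x + speed * dt * math.cos(pose.heading),
                      pose.y + speed * dt * math.sin(pose.heading),
                      pose.heading)
    # Motion along a circular arc of radius speed / yaw_rate.
    new_heading = pose.heading + yaw_rate * dt
    radius = speed / yaw_rate
    return Pose2D(
        pose.x + radius * (math.sin(new_heading) - math.sin(pose.heading)),
        pose.y - radius * (math.cos(new_heading) - math.cos(pose.heading)),
        new_heading,
    )


straight = predict(Pose2D(0.0, 0.0, 0.0), speed=10.0, yaw_rate=0.0, dt=2.0)
quarter_turn = predict(Pose2D(0.0, 0.0, 0.0), speed=1.0,
                       yaw_rate=math.pi / 2, dt=1.0)
```

Calling `predict` repeatedly with fresh measurements tracks the pose; calling it with a future `dt` predicts where the vehicle will be. A model that also uses an IMU would typically replace the odometry-derived yaw rate with a measured one.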

Developing with DriveWorks

You will need:

- Host Development PC

- DriveWorks SDK, available through our NVIDIA DRIVE Developer Program for DRIVE AGX

Steps:

- Install DriveWorks through DRIVE Downloads and review the DriveWorks Reference Documentation.

- Try out the DriveWorks 'Hello World' tutorial and sample.

- Experiment with the other DriveWorks Samples and Tutorials. The Samples, which include source code, demonstrate key capabilities and are intended to be used as a starting point for developing and optimizing code. Key Use Cases include:

A new gesture control system could change the way we use in-car infotainment. Stuart Nathan reports

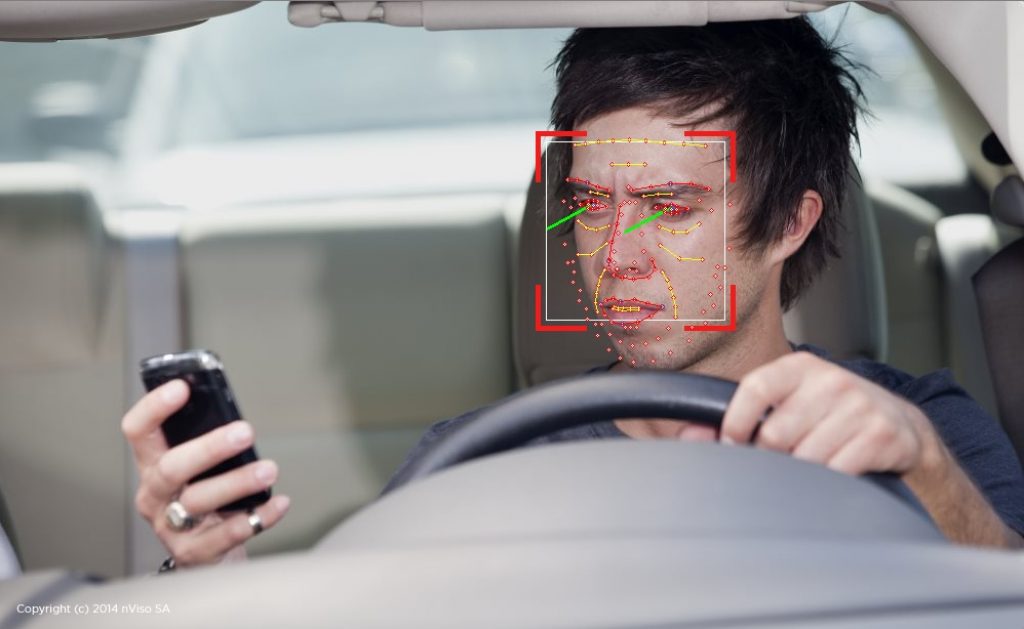

The best way to avoid being distracted while driving is simply to keep your eyes on the road. But human nature dictates that drivers want to do other things. So many people have become accustomed to the conveniences of smartphones – from being able to consult constantly-updated information to simply keeping in touch with people via social networking, while having your full library of music at your fingertips – that they are unwilling to give this up, even while in control of several tonnes of high-speed metal. The convergence of satnav with smartphones is another contributing factor.

So, as people are going to use infotainment while driving anyway, what’s the best way to allow them to do that safely? In-car audio specialist Harman, which provides systems for marques such as BMW, Audi, Jeep and Ferrari, is trying to come up with solutions to the problem.

Different car makers have different preferred options. For example, Volkswagen likes having touch-screens. But these have to be placed high in the dashboard so that the driver’s eye can flick easily and quickly from the screen to the road. The layout of the cabin means that the screen is therefore a considerable distance from the driver – further than he or she can reach by just taking a hand off the wheel. So as well as taking your eyes momentarily from the road, you have to lean forward a bit.

Audi, meanwhile, prefers a system with a screen that doesn’t respond to touch, and a separate controller placed near to the shift lever or gearstick, which can be operated simply by the driver dropping his or her hand downwards without otherwise changing position. This can be a twist controller – a knob that moves a cursor down a list of options on a viewscreen – or a touch-sensitive pad. For example, the destination for a satnav can be selected by drawing the initial letter of the placename onto the surface of the pad – P for Paris, say – then using the twist knob to move through options. Although this generally entails looking away from the road for longer, the ability to remain in the driving position is seen as safer than having to move while looking at the screen.

One answer is to borrow a bit of technology that was introduced by the defence sector some decades ago: the head-up display (HUD). If it helps fighter pilots keep their attention where it belongs, surely it can do the same for car drivers? The principle is straightforward: show the vital information in the same area that the driver is looking, on the windscreen itself. But in practice it’s not that simple: the information still has to be shown in such a way that it doesn’t obscure safety-critical parts of the windscreen, and there still needs to be a way for the driver to interact with the information – select items of importance, activate functions and so on – in such a way that it doesn’t break their concentration.

Harman’s answer to this is to combine a head-up display with gesture control. Currently only embodied as a demonstration unit and not yet incorporated into a car, the system projects a display onto an angled glass sheet placed on top of the dashboard – it functions something like an autocue, although in a car it would project onto the windscreen.

The information on the display is integrated with displays on the dashboard and on the instrument console, but what is displayed on the HUD varies. When driving, for example, it will only display information vital to the task (that is, not phone calls). A camera in the front of the car works as a machine vision device, measuring the distance to the vehicle in front. Information such as direction arrows for satnav, the current speed limit of the road and the car’s speed are displayed ‘on the road’ between the front of the car and the vehicle in front.

The infotainment system incorporates a wi-fi hotspot so that it can stream traffic news and weather reports. This information is displayed on the dashboard display, but can be moved up to the HUD using gesture control. This uses a system similar to the Microsoft Kinect motion sensor for games consoles: sensors in the dashboard monitor the driver’s movements, recognising specific programmed gestures. For example, to turn the music in the car down, the driver places his or her hand above a control knob by the gear selector and moves it up or down sharply. To turn it off, you make a gesture like patting an invisible dog, twice.

When the car is stationary, functions such as the phone are enabled. If someone rings, an icon for the caller is displayed at the lower left-hand side of the HUD. To answer the call, you point to the icon. To dismiss it, you sweep it away. Similarly, information such as weather conditions can be swept up from the dashboard onto the HUD. The advantage of this, according to Harman, is that the driver’s eyes never have to refocus; all the vital information is displayed at a convenient distance, making use of peripheral vision but not requiring any change in attention.
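
The rule that the HUD shows only task-critical information while driving, and enables the phone when stationary, can be sketched as a simple filter. This is purely illustrative: the article does not describe how Harman implements it, and the `HudItem` names and the choice to withhold only phone calls while moving are assumptions.

```python
from enum import Enum, auto


class HudItem(Enum):
    """Hypothetical HUD items, named after those mentioned in the article."""
    NAV_ARROW = auto()
    SPEED_LIMIT = auto()
    CURRENT_SPEED = auto()
    WEATHER = auto()
    PHONE_CALL = auto()


# Assumption: only phone calls are withheld while the car is moving.
BLOCKED_WHILE_MOVING = frozenset({HudItem.PHONE_CALL})


def visible_items(requested, vehicle_moving):
    """Return the requested HUD items that may be shown in the current state."""
    if not vehicle_moving:
        return list(requested)
    return [item for item in requested if item not in BLOCKED_WHILE_MOVING]


while_driving = visible_items([HudItem.NAV_ARROW, HudItem.PHONE_CALL], True)
while_parked = visible_items([HudItem.NAV_ARROW, HudItem.PHONE_CALL], False)
```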

The system also works as an alarm. For example, when the front camera system detects that the car is too close to the vehicle in front for the speed at which it’s travelling, it displays a warning signal on the HUD (the direction arrow for the satnav turns red) and it can also sound an audible alarm. Similar warnings can also be set if the car drifts out of its lane. Further sensors on the side of the car detect vehicles in the driver’s blind spot and provide another HUD alert.
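
One common way to express "too close for the speed" is time headway: the gap to the vehicle in front divided by the car's own speed. The sketch below uses that measure; the article does not say which criterion Harman's system applies, and the two-second threshold is an assumed rule of thumb, not a figure from the source.

```python
import math


def time_headway_s(gap_m: float, speed_mps: float) -> float:
    """Seconds until the car would cover the current gap at its current speed."""
    if speed_mps <= 0:
        return math.inf  # a stationary car never closes the gap
    return gap_m / speed_mps


def too_close(gap_m: float, speed_mps: float, min_headway_s: float = 2.0) -> bool:
    """True when the headway falls below the (assumed) minimum threshold."""
    return time_headway_s(gap_m, speed_mps) < min_headway_s


# At 30 m/s (108 km/h), a 20 m gap gives well under two seconds of headway.
warn_near = too_close(gap_m=20.0, speed_mps=30.0)
warn_far = too_close(gap_m=70.0, speed_mps=30.0)
```

In a system like the one described, a `True` result would be what turns the satnav arrow red and triggers the audible alarm.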

Just to add a level of in-car comfort, the system also has a driver-facing camera equipped with a face-recognition system, which can be programmed for regular drivers. This automatically sets up the car for a particular driver’s preferred settings, such as their seat position, steering wheel rake, ideal temperature and favoured radio stations.

Harman is currently demonstrating the system at specialist exhibitions and to its regular customers, and is optimistic that it could be on the market within five years. ‘It’s a totally logical step for us,’ said vice-president for infotainment and lifestyle Michael Mauser. ‘The automotive sector is moving towards greater in-car connectivity and Harman is ideally positioned to make this technology part of the smart, responsive control systems.’

The system is, in fact, surprisingly intuitive, in much the same way that a touch-screen tablet turns out to be easy to use. Although it’s impossible to replicate the demands of driving in a static display, the visual feedback of moving display items from HUD to dashboard and back while making simple gestures is easy to understand and quickly becomes second nature, without the need to take your hands off the wheel for longer than it would take to change gear or switch on the headlights, for example.

What springs to mind is the gesture-controlled display device in the film Minority Report, where unneeded items can be swept out of the way. Minority Report also had flying cars, though, and while Harman’s system is futuristic, it’s not that futuristic.